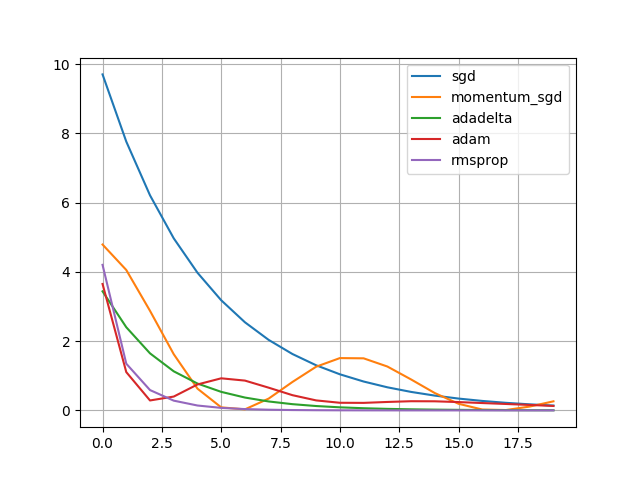

Various optimizers: SGD, Momentum SGD, AdaGrad, RMSprop, AdaDelta, Adam

The following Qiita article explains these methods clearly, with formulas:

Optimizer : 深層学習における勾配法について - Qiita ("Optimizer: On Gradient Methods in Deep Learning")
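
To give a rough feel for what those formulas do, here is a minimal pure-Python sketch of three of the update rules (SGD, Momentum SGD, Adam) on a toy one-dimensional loss. The loss `f(w) = (w - 3)^2`, the function names, and the hyperparameter values are all assumptions chosen for illustration; they are not taken from the Qiita article or from PyTorch. The momentum form used here (velocity accumulates raw gradients) is one common convention among several.

```python
import math


def grad(w):
    # Gradient of the toy loss f(w) = (w - 3)^2 (illustrative assumption).
    return 2.0 * (w - 3.0)


def sgd(w, lr=0.1, steps=100):
    # Plain gradient descent: step against the gradient.
    for _ in range(steps):
        w -= lr * grad(w)
    return w


def momentum_sgd(w, lr=0.1, mu=0.9, steps=500):
    # The velocity v accumulates past gradients, decayed by mu each step.
    v = 0.0
    for _ in range(steps):
        v = mu * v + grad(w)
        w -= lr * v
    return w


def adam(w, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8, steps=500):
    # m and v are running averages of the gradient and squared gradient.
    m, v = 0.0, 0.0
    for t in range(1, steps + 1):
        g = grad(w)
        m = beta1 * m + (1 - beta1) * g
        v = beta2 * v + (1 - beta2) * g * g
        m_hat = m / (1 - beta1 ** t)  # bias correction for the zero init
        v_hat = v / (1 - beta2 ** t)
        w -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return w


if __name__ == "__main__":
    for name, fn in [("sgd", sgd), ("momentum_sgd", momentum_sgd), ("adam", adam)]:
        print(name, fn(0.0))
```

Starting from `w = 0.0`, all three should end up close to the minimum at `w = 3`; they differ in how the step size and direction are derived from the gradient history.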

Optimizers (gradient methods) in PyTorch

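The following script trains a single 10x10 linear layer (no bias) for 20 steps with each optimizer and saves the loss curves to 1_2_1.png: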

    #!/usr/local/python3/bin/python3
    # -*- coding: utf-8 -*-

    import torch
    import torch.nn as nn
    import torch.optim as optim

    # Use a non-interactive backend so the plot can be saved without a display.
    import matplotlib
    matplotlib.use('Agg')
    import matplotlib.pylab as plb


    def main():
        # One list of per-step losses for each optimizer being compared.
        loss_dict = {}
        loss_dict["sgd"] = []
        loss_dict["momentum_sgd"] = []
        loss_dict["adadelta"] = []
        loss_dict["adam"] = []
        loss_dict["rmsprop"] = []

        for key in loss_dict:
            print(key)
            loss_dict[key] = calc_loss_list(key)

        plb.figure()
        plb.plot(loss_dict["sgd"], label='sgd')
        plb.plot(loss_dict["momentum_sgd"], label='momentum_sgd')
        plb.plot(loss_dict["adadelta"], label='adadelta')
        plb.plot(loss_dict["adam"], label='adam')
        plb.plot(loss_dict["rmsprop"], label='rmsprop')
        plb.legend()
        plb.grid()
        plb.savefig('1_2_1.png')


    class Net(nn.Module):
        def __init__(self):
            super().__init__()
            # nn.Linear(input dimension, output dimension)
            self.lin1 = nn.Linear(in_features=10, out_features=10, bias=False)

        def forward(self, x):
            x = self.lin1(x)
            return x


    def calc_loss_list(opt_conf):
        loss_list = []

        # Create the data
        x = torch.randn(1, 10)
        w = torch.randn(1, 1)
        y = torch.mul(w, x) + 2

        # Define the network
        net = Net()

        # Loss function
        criterion = nn.MSELoss()

        # Optimizer
        if opt_conf == "sgd":
            optimizer = optim.SGD(net.parameters(), lr=0.1)
        elif opt_conf == "momentum_sgd":
            optimizer = optim.SGD(net.parameters(), lr=0.1, momentum=0.9)
        elif opt_conf == "adadelta":
            optimizer = optim.Adadelta(net.parameters(), rho=0.95, eps=1e-04)
        elif opt_conf == "adagrad":
            optimizer = optim.Adagrad(net.parameters())
        elif opt_conf == "adam":
            optimizer = optim.Adam(net.parameters(), lr=1e-1, betas=(0.9, 0.99), eps=1e-09)
        elif opt_conf == "rmsprop":
            optimizer = optim.RMSprop(net.parameters())
        else:
            raise ValueError("unknown optimizer: " + opt_conf)

        # Training
        for epoch in range(20):
            optimizer.zero_grad()
            y_pred = net(x)
            loss = criterion(y_pred, y)
            loss.backward()
            optimizer.step()
            loss_list.append(loss.item())

        return loss_list


    if __name__ == '__main__':
        main()
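
One thing to keep in mind when reading the resulting plot: `calc_loss_list` draws new random data and new initial weights on every call, so each optimizer is started on a slightly different problem. A minimal sketch of one way to line the runs up, assuming a fixed seed is acceptable for the comparison, is shown below. The names `loss_curve` and `make_optimizer` are hypothetical helpers, not part of the script above, and a bare `nn.Linear` stands in for the `Net` class.

```python
import torch
import torch.nn as nn
import torch.optim as optim


def loss_curve(make_optimizer, seed=0, steps=20):
    # Re-seeding before each run gives every optimizer the same data and
    # the same initial weights, so all curves start from the same loss.
    torch.manual_seed(seed)
    x = torch.randn(1, 10)
    w = torch.randn(1, 1)
    y = torch.mul(w, x) + 2

    net = nn.Linear(in_features=10, out_features=10, bias=False)
    criterion = nn.MSELoss()
    optimizer = make_optimizer(net.parameters())

    losses = []
    for _ in range(steps):
        optimizer.zero_grad()
        loss = criterion(net(x), y)
        loss.backward()
        optimizer.step()
        losses.append(loss.item())
    return losses


sgd_curve = loss_curve(lambda params: optim.SGD(params, lr=0.1))
adam_curve = loss_curve(lambda params: optim.Adam(params, lr=1e-1))
print(sgd_curve[0], adam_curve[0])
```

Since the first loss is recorded before any parameter update, the two printed values should be identical, and any later difference between the curves comes from the optimizers rather than from the random draw.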

Running the optimizer comparison script writes an image file (1_2_1.png) like the following: