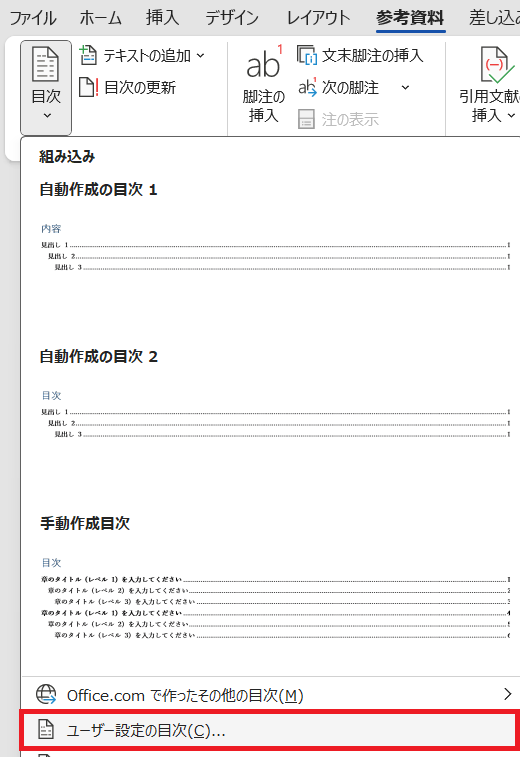

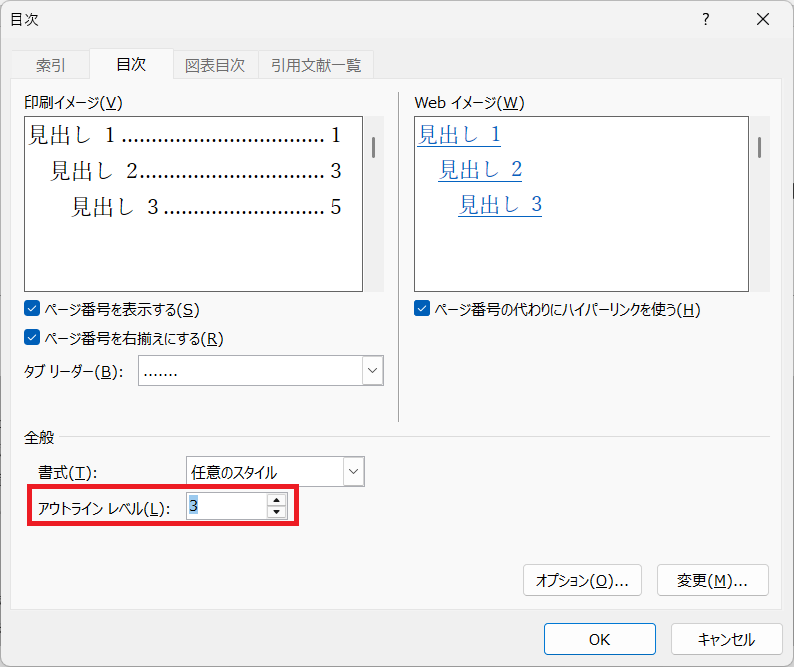

To batch-convert multiple DWG files to PDF with AutoCAD's PUBLISH command, first set the plot settings of every DWG file in a directory to monochrome and A3 landscape.

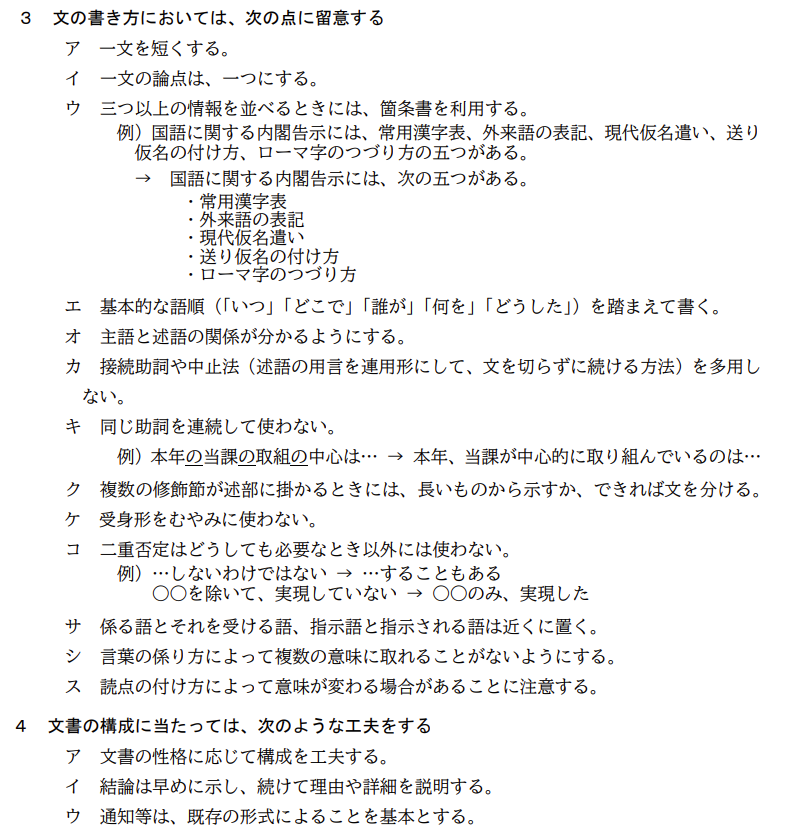

```lisp
; SETPLOTCFG command
; Set the plot configuration of every DWG file in a directory
; - Printer: AutoCAD PDF (General Documentation).pc3
; - Paper: ISO full bleed A3 (420 x 297 mm), landscape
; - Style table: monochrome.ctb
(defun c:setplotcfg ( / dwgdir files f fullpath )
  (princ "\nSTART SETPLOTCFG()")
  (vl-load-com)
  (setq dwgdir "c:/Users/end0t/dev/AUTOLISP/DWG")
  ; 1 = return file names only (no directories)
  (setq files (vl-directory-files dwgdir "*.dwg" 1))
  (foreach f files
    (setq fullpath (strcat dwgdir "/" f))
    (set-plot-config fullpath)
  )
  (princ "\nDONE SETPLOTCFG()")
  (princ)
)

; Open a DWG and set the plot configuration of its non-Model layouts
(defun set-plot-config ( dwgpath / acad docs doc layouts lay lay-name dwgname changed )
  (vl-load-com)
  (setq acad (vlax-get-acad-object))
  (setq docs (vla-get-Documents acad))
  (setq doc (vla-open docs dwgpath))
  (setq dwgname (vla-get-Name doc))
  (setq changed nil)
  (princ (strcat "\n\nDWG: " dwgname))
  (setq layouts (vla-get-Layouts doc))
  (vlax-for lay layouts
    (setq lay-name (vla-get-Name lay))
    (if (/= lay-name "Model")
      (progn
        (princ (strcat "\n  Layout: " lay-name))
        ; Printer/plotter
        (vla-put-ConfigName lay "AutoCAD PDF (General Documentation).pc3")
        ; Refresh the device info so the new printer's paper sizes are available
        (vla-RefreshPlotDeviceInfo lay)
        (princ "\n  ConfigName: AutoCAD PDF (General Documentation).pc3")
        ; Paper size: ISO full bleed A3 (420 x 297 mm)
        (vla-put-CanonicalMediaName lay "ISO_full_bleed_A3_(420.00_x_297.00_MM)")
        (princ "\n  PaperSize: ISO full bleed A3 (420 x 297 MM)")
        ; Plot area: Extents (1 = acExtents)
        (vla-put-PlotType lay 1)
        (princ "\n  PlotType: Extents")
        ; Scale 1:1 (16 = ac1_1)
        (vla-put-UseStandardScale lay :vlax-true)
        (vla-put-StandardScale lay 16)
        (princ "\n  Scale: 1:1")
        ; Center the plot on the paper
        (vla-put-CenterPlot lay :vlax-true)
        (princ "\n  CenterPlot: TRUE")
        ; Plot rotation: landscape (0 = 0, 1 = 90, 2 = 180, 3 = 270 degrees)
        (vla-put-PlotRotation lay 0)
        (princ "\n  PlotRotation: Landscape")
        ; Plot style table
        (vla-put-StyleSheet lay "monochrome.ctb")
        (princ "\n  StyleSheet: monochrome.ctb")
        ; Plot with plot styles
        (vla-put-PlotWithPlotStyles lay :vlax-true)
        (princ "\n  PlotWithPlotStyles: TRUE")
        ; Plot object lineweights
        (vla-put-PlotWithLineweights lay :vlax-true)
        (princ "\n  PlotWithLineweights: TRUE")
        ; Do not plot viewport borders
        (vla-put-PlotViewportBorders lay :vlax-false)
        (princ "\n  PlotViewportBorders: FALSE")
        (setq changed T)
      )
    )
  )
  ; Save only if at least one layout was updated
  (if changed
    (progn
      (vla-save doc)
      (princ "\n  -> Saved.")
    )
  )
  ; Already saved above, so close without saving again
  (vla-close doc :vlax-false)
)
```
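To confirm the result before running PUBLISH, the settings can be read back from one of the processed drawings. The following is a minimal sketch: the command name `CHECKPLOTCFG` is hypothetical and not part of the original code, and it only inspects the active drawing, so open a processed DWG first and then run the command.

```lisp
; CHECKPLOTCFG command (hypothetical helper, not part of the original)
; Print the plot settings of every non-Model layout in the active drawing
(defun c:checkplotcfg ( / doc lay )
  (vl-load-com)
  (setq doc (vla-get-ActiveDocument (vlax-get-acad-object)))
  (vlax-for lay (vla-get-Layouts doc)
    (if (/= (vla-get-Name lay) "Model")
      (progn
        (princ (strcat "\nLayout: " (vla-get-Name lay)))
        (princ (strcat "\n  ConfigName: " (vla-get-ConfigName lay)))
        (princ (strcat "\n  CanonicalMediaName: " (vla-get-CanonicalMediaName lay)))
        (princ (strcat "\n  StyleSheet: " (vla-get-StyleSheet lay)))
        ; PlotRotation is an integer enum (0 = 0 degrees, i.e. landscape here)
        (princ (strcat "\n  PlotRotation: " (itoa (vla-get-PlotRotation lay))))
      )
    )
  )
  (princ)
)
```

Each layout should report the PDF printer, the A3 full-bleed media name, `monochrome.ctb`, and a rotation of 0.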